Locating All Plausible Regions of Focus

Overview

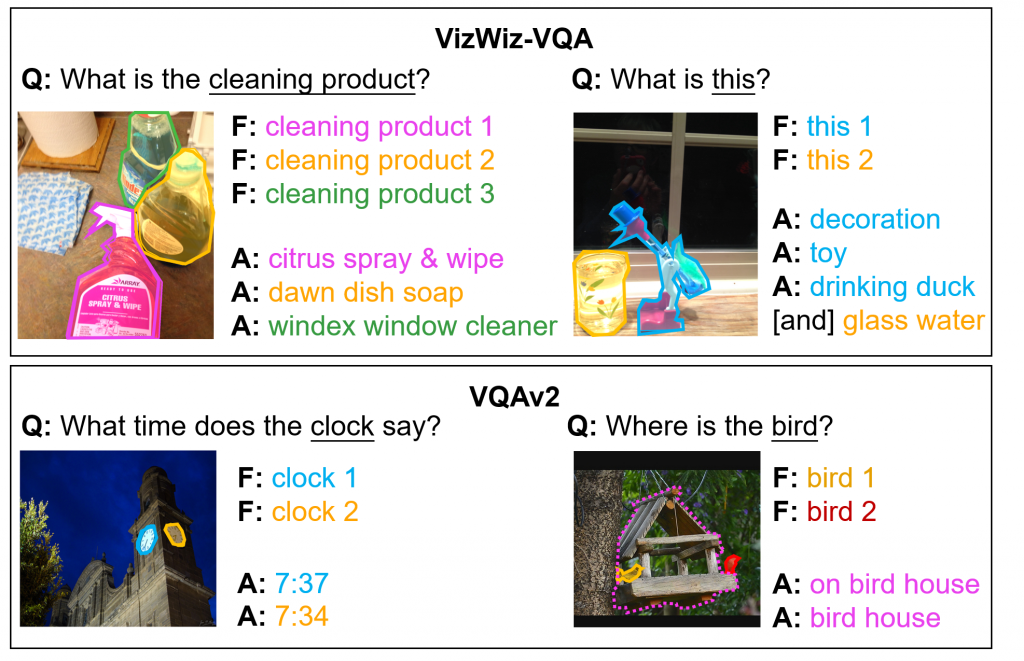

Visual Question Answering (VQA) is the task of predicting the answer to a question about an image. However, the language in a question can be ambiguous, referring to multiple plausible visual regions in an image. We call this focus ambiguity in visual questions, or more concisely “ambiguous questions” and “question ambiguity”. We believe that a VQA system should notify users when a question is ambiguous and help them arrive at the desired interpretation.

We publicly share the VQ-FocusAmbiguity dataset, the first dataset segmenting all plausible image regions to which the language in each question could refer. Success in developing ambiguity-aware solutions can immediately benefit today’s users of VQA services, spanning blind and sighted individuals, who already regularly ask visual questions using mobile phone apps (e.g., Be My AI, Microsoft’s Seeing AI), smart glasses (e.g., Meta’s Ray Bans, Envision AI), and the web (e.g., Q&A platforms such as Stack Exchange). It would enable AI agents to alert users when a question is ambiguous and to interactively guide them towards disambiguating their intent by having them specify which of the plausible image regions the question refers to.

VQ-FocusAmbiguity Dataset

The VQ-FocusAmbiguity dataset includes:

- 70 training examples

- 70 validation examples

- 5,360 test examples

- Annotation [Zip files]

Zip file structure:

├── images/
│   ├── filename1
│   └── filename2
│   ...
├── masks_public/
│   ├── train/
│   │   ├── masks_id1
│   │   └── masks_id2
│   │   ...
│   └── val/
│       ├── masks_id1
│       └── masks_id2
│       ...
├── train_public.json
├── val_public.json
└── test_public.json

Each JSON annotation record has the following format:

{"set": "train",
 "id": 0,
 "masks_id": "00000000",
 "file_name": "COCO_train2014_000000423710.jpg",
 "question": "Where is the tissue?",
 "label": "ambiguous"}
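As a rough illustration of working with records in this format, the following Python sketch counts records per label and collects the questions marked as ambiguous. It assumes that each `*_public.json` file holds a JSON list of such records, which is not stated above; `load_annotations` and `summarize_annotations` are hypothetical helper names, and the inline sample simply reuses the example record.

```python
import json
from collections import Counter


def load_annotations(path):
    """Read one annotation file.

    Assumption: the file contains a JSON list of records shaped like the
    example record; adjust if the real files are structured differently.
    """
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_annotations(records):
    """Return (label counts, list of questions labeled "ambiguous")."""
    label_counts = Counter(record["label"] for record in records)
    ambiguous_questions = [
        record["question"] for record in records if record["label"] == "ambiguous"
    ]
    return label_counts, ambiguous_questions


# Inline sample so the sketch runs without the dataset on disk.
sample_records = [
    {
        "set": "train",
        "id": 0,
        "masks_id": "00000000",
        "file_name": "COCO_train2014_000000423710.jpg",
        "question": "Where is the tissue?",
        "label": "ambiguous",
    }
]

label_counts, ambiguous_questions = summarize_annotations(sample_records)
print(dict(label_counts))
print(ambiguous_questions)
```

With the dataset unpacked, `summarize_annotations(load_annotations("train_public.json"))` would be the equivalent call, provided the list assumption holds.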

This work is licensed under a Creative Commons Attribution 4.0 International License.

Challenge

Our proposed challenge is designed around the VQ-FocusAmbiguity dataset described above.

Task 1 – Recognizing Questions with Focus Ambiguity

Given a visual question (question-image pair), the task is to predict whether the question has focus ambiguity. Submissions will be evaluated based on the F1 score. The team that achieves the highest F1 score wins this challenge.
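For local sanity checks before submitting, a binary F1 score can be computed as in the sketch below. It is only an illustration, not the evaluation server's code: it assumes that "ambiguous" is treated as the positive class and that the other label is the string "unambiguous", neither of which is confirmed here. `f1_score` is a hypothetical helper name.

```python
def f1_score(y_true, y_pred, positive="ambiguous"):
    """Binary F1 for the `positive` class (assumed to be "ambiguous")."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        # No true positives: precision and recall are both 0 (or undefined).
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy labels; "unambiguous" is an assumed name for the negative class.
truth = ["ambiguous", "ambiguous", "unambiguous", "unambiguous"]
predicted = ["ambiguous", "unambiguous", "ambiguous", "unambiguous"]
score = f1_score(truth, predicted)
print(score)
```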

Task 2 – Locating All Plausible Regions of Focus

Given a visual question (question-image pair), the task is to return all the regions in the image that could be the question’s focus. Submissions will be evaluated based on the mAP, union IoU, and max IoU scores across all test images. The team that achieves the highest mAP score wins this challenge.
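The exact metric definitions used by the evaluation server are not given on this page. The sketch below shows one plausible reading of the two IoU-based scores, purely as an assumption: union IoU compares the union of all predicted regions with the union of all ground-truth regions, and max IoU averages, over ground-truth regions, the best IoU achieved by any predicted region. Regions are represented as sets of (row, column) pixel coordinates to keep the example dependency-free; all function names are hypothetical.

```python
def iou(region_a, region_b):
    """Intersection over union of two pixel sets; 0.0 if both are empty."""
    union = region_a | region_b
    return len(region_a & region_b) / len(union) if union else 0.0


def union_iou(pred_regions, gt_regions):
    """Assumed definition: IoU between the merged predictions and merged ground truth."""
    pred_union = set().union(*pred_regions)
    gt_union = set().union(*gt_regions)
    return iou(pred_union, gt_union)


def max_iou(pred_regions, gt_regions):
    """Assumed definition: mean over ground-truth regions of the best-matching IoU."""
    if not gt_regions:
        return 0.0
    best_scores = [
        max((iou(pred, gt) for pred in pred_regions), default=0.0)
        for gt in gt_regions
    ]
    return sum(best_scores) / len(best_scores)


# Toy example: two ground-truth regions, two predicted regions.
gt_regions = [{(0, 0), (0, 1)}, {(2, 2), (2, 3)}]
pred_regions = [{(0, 0), (0, 1)}, {(2, 2), (5, 5)}]

print(union_iou(pred_regions, gt_regions))
print(max_iou(pred_regions, gt_regions))
```

mAP is omitted because it additionally depends on confidence scores and IoU thresholds that are not specified here.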

Submission Instructions

Evaluation Servers

Teams participating in the challenge must submit results for the test portion of the dataset to our evaluation servers, which are hosted on EvalAI. We created different partitions of the test dataset to support different evaluation purposes:

- Test-dev: this partition consists of 100 test visual questions and is available year-round. Each team can upload at most 10 submissions per day to receive evaluation results.

- Test-challenge: this partition is available for a limited duration before the Computer Vision and Pattern Recognition (CVPR) conference in June 2026 to support the challenge for the VQA task, and contains all 5,360 visual questions in the test dataset. Results on this partition will determine the challenge winners, which will be announced during the VizWiz Grand Challenge workshop hosted at CVPR. Each team can submit at most five results files over the length of the challenge and at most one result per day. The best scoring submitted result for each team will be selected as the team’s final entry for the competition.

- Test-standard: this partition is available to support algorithm evaluation year-round, and contains all 5,360 visual questions in the test dataset. Each team can submit at most five results files and at most one result per day. Each team can choose to share their results publicly or keep them private. When shared publicly, the best scoring submitted result will be published on the public leaderboard and will be selected as the team’s final entry for the competition.

Uploading Submissions to Evaluation Servers

To submit results, each team will first need to create a single account on EvalAI. Then, on the platform, click on the “Submit” tab, select the submission phase (“test”), select the results file (i.e., zip file) to upload, fill in the required metadata about the method, and click “Submit”. The evaluation server may take several minutes to process the results. To have the submission results appear on the public leaderboard, check the box under “Show on Leaderboard”.

To view the status of a submission, navigate on the EvalAI platform to the “My Submissions” tab and choose the phase to which the results file was uploaded (i.e., “test”). One of the following statuses should be shown: “Failed” or “Finished”. If the status is “Failed”, please check the “Stderr File” for the submission to troubleshoot. If the status is “Finished”, the evaluation successfully completed and the evaluation results can be downloaded. To do so, select “Result File” to retrieve the aggregated score for the submission phase used (i.e., “test”).

Submission Results Formats

Please submit a ZIP file with a folder inside containing all test results. Each result must be a binary mask in the format of a PNG file.

Mask images are named using the scheme VizWiz_test_<image_id>.png, where <image_id> matches the corresponding VizWiz image for the mask.

Mask images must have the exact same dimensions as their corresponding VizWiz image.

Mask images are encoded as single-channel (grayscale) 8-bit PNGs (to provide lossless compression), where each pixel is either:

- 0: representing the background of the image, or areas outside the answer grounding

- 255: representing the foreground of the image, or areas inside the answer grounding

The foreground region in a mask image may be of any size (including the entire image).
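The format rules above can be sketched with the Python standard library alone: the example below encodes a binary mask as a single-channel 8-bit PNG with pixel values 0 and 255, names it with the VizWiz_test_<image_id>.png scheme, and packs it into a ZIP file under one folder. The folder name `results`, the image ID `00000000`, and the helper names are assumptions made for illustration; in practice an imaging library would more likely be used to write the PNGs.

```python
import io
import struct
import zipfile
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def _png_chunk(tag, data):
    """Build one PNG chunk: length, tag, data, CRC over tag + data."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def encode_mask_png(mask):
    """Encode a 2D list of truthy/falsy values as an 8-bit grayscale PNG.

    Foreground pixels become 255 and background pixels become 0.
    """
    height, width = len(mask), len(mask[0])
    # IHDR: width, height, bit depth 8, color type 0 (grayscale),
    # default compression, default filter, no interlace.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    # Each scanline starts with filter type 0 (no filtering).
    raw = b"".join(
        b"\x00" + bytes(255 if value else 0 for value in row) for row in mask
    )
    return (
        PNG_SIGNATURE
        + _png_chunk(b"IHDR", ihdr)
        + _png_chunk(b"IDAT", zlib.compress(raw))
        + _png_chunk(b"IEND", b"")
    )


def build_submission_zip(masks_by_image_id, folder="results"):
    """Return ZIP bytes with one PNG per image inside `folder` (assumed name)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for image_id, mask in masks_by_image_id.items():
            name = f"{folder}/VizWiz_test_{image_id}.png"
            archive.writestr(name, encode_mask_png(mask))
    return buffer.getvalue()


# Toy 2x3 mask; a real mask must match its image's dimensions exactly.
demo_mask = [[0, 1, 1], [0, 1, 0]]
submission_bytes = build_submission_zip({"00000000": demo_mask})
print(len(submission_bytes) > 0)
```

Writing `submission_bytes` to a file such as `submission.zip` (a hypothetical name) would give an archive with the layout described above.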

Leaderboards

The Leaderboard page for the challenge can be found here.

Rules

- Teams are allowed to use external data to train their algorithms. The only exception is that teams are not allowed to use any annotations of the test dataset.

- Members of the same team are not allowed to create multiple accounts for a single project to submit more than the maximum number of submissions permitted per team on the test-challenge and test-standard datasets. The only exception is if the person is part of a team that is publishing more than one paper describing unrelated methods.

Publication

- Acknowledging Focus Ambiguity in Visual Questions

Chongyan Chen, Yu-Yun Tseng, and Danna Gurari. International Conference on Computer Vision (ICCV), 2025.

Contact Us

For any questions, comments, or feedback, please send them to Everley Tseng at Yu-Yun.Tseng@colorado.edu or Chongyan Chen at chongyanchen_hci@utexas.edu.