Recognize the Presence and Purpose of Private Visual Information

Overview

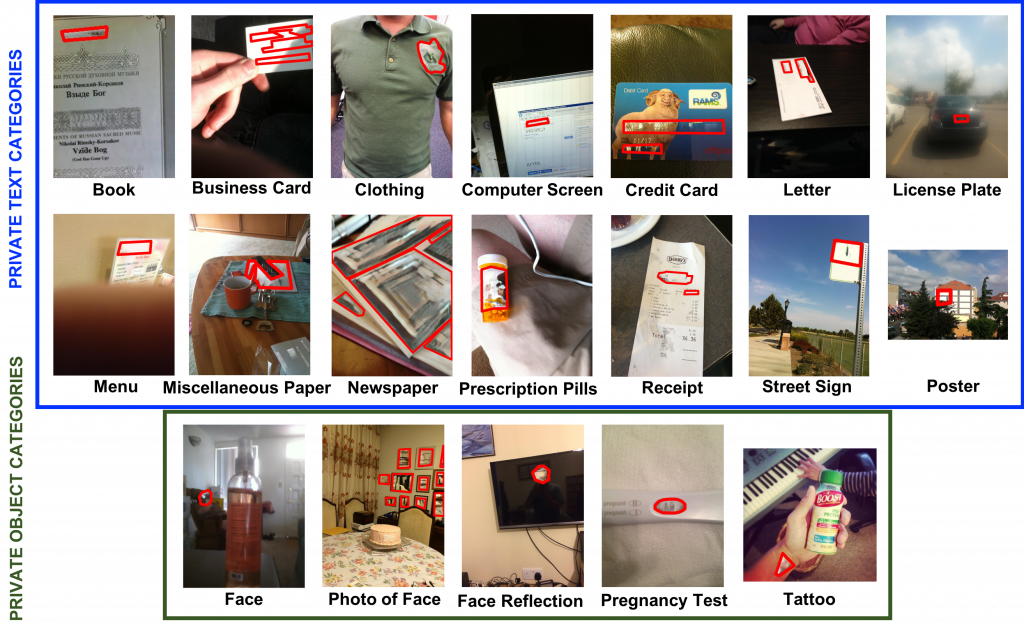

We introduce the first visual privacy dataset originating from people who are blind in order to better understand their privacy disclosures and to encourage the development of algorithms that can assist in preventing their unintended disclosures. For each image, we manually annotate private regions according to a taxonomy that represents privacy concerns relevant to their images. We also annotate whether the private visual information is needed to answer questions asked about the private images. These annotations serve as a critical foundation for designing algorithms that can decide (1) whether private information is in an image and (2) whether a question about an image asks about the private content in the image.

Dataset

The VizWiz-Priv dataset includes:

- non-private images

- private images with private content replaced by the ImageNet mean

- private images with private content replaced by fake content

- annotations

Private Visual Information Recognition:

- 8,822 training images

- 1,374 validation images

- 3,430 test images

- Annotations: privacy category, private region (polygon)

- Python API to read and visualize the dataset

- Python evaluation code

(Un)intentional Privacy Leak Recognition:

- 2,148 training image/question pairs

- 537 testing image/question pairs

- Python API to read and visualize the dataset

- Python evaluation code

The download file is organized as follows:

- Annotations are split into three JSON files: train, validation, and test.

- APIs are provided to demonstrate how to parse the JSON files and evaluate methods against the ground truth.

- Details about each image are in the following format:

    "image": "VizWiz_v2_000000031177.jpg",
    "private": 1,
    "private_regions": [{
        "class": "Text:Computer Screen",
        "polygon": [[397, 479], [535, 473], [541, 496], [403, 506]]
    }, {
        "class": "Text:Computer Screen",
        "polygon": [[405, 552], [574, 543], [572, 574], [413, 589]]
    }],
    "question": "are you able to see the security on this?",
    "qa_private": 1

This work is licensed under a Creative Commons Attribution 4.0 International License.
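As a rough illustration of the record format shown in this section, the following sketch parses one record with Python's standard `json` module and summarizes each private region. It is not the provided Python API. The enclosing braces, the `[x, y]` pixel interpretation of polygon vertices, and the helper names `bounding_box` and `polygon_area` are assumptions made for this example.

```python
import json

# One record, wrapped in braces so it parses as standalone JSON (an assumption;
# the layout of the real annotation files may differ).
record_text = """
{
  "image": "VizWiz_v2_000000031177.jpg",
  "private": 1,
  "private_regions": [
    {"class": "Text:Computer Screen",
     "polygon": [[397, 479], [535, 473], [541, 496], [403, 506]]},
    {"class": "Text:Computer Screen",
     "polygon": [[405, 552], [574, 543], [572, 574], [413, 589]]}
  ],
  "question": "are you able to see the security on this?",
  "qa_private": 1
}
"""


def bounding_box(polygon):
    """Return (x_min, y_min, x_max, y_max), assuming vertices are [x, y] pairs."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return min(xs), min(ys), max(xs), max(ys)


def polygon_area(polygon):
    """Area of a simple polygon in square pixels (shoelace formula)."""
    total = 0
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


record = json.loads(record_text)
boxes = [bounding_box(r["polygon"]) for r in record["private_regions"]]
areas = [polygon_area(r["polygon"]) for r in record["private_regions"]]

for region, box, area in zip(record["private_regions"], boxes, areas):
    print(region["class"], box, area)
```

Bounding boxes and areas are only convenient summaries here; the polygons themselves remain the ground-truth region annotation.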

Challenge

Our proposed challenge is designed around the VizWiz-Priv dataset and addresses the following two tasks:

Tasks

Task 1: Private Visual Information Recognition

Given an image, the task is to predict whether private content is present in the image. The confidence score output by a prediction model is for the ‘private’ label and should be in [0,1]. We evaluate with a Python implementation of average precision, which summarizes the precision-recall curve as a weighted mean of the precisions along it. The team that achieves the highest average precision on the test set wins this challenge.
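To make the metric concrete, here is a minimal pure-Python sketch of average precision under one common definition, in which the precision at each score threshold is weighted by the increase in recall since the previous threshold. The exact weighting and tie handling are assumptions; the provided Python evaluation code is the authority on how submissions are scored. The function name and toy data are hypothetical.

```python
def average_precision(labels, scores):
    """AP = sum over thresholds of (R_n - R_{n-1}) * P_n.

    labels: 0/1 ground truth (1 = private); scores: confidences in [0, 1].
    Predictions with equal scores are treated as a single threshold.
    """
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("average precision is undefined without positives")

    # Rank predictions from most to least confident.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)

    ap = 0.0
    true_pos = 0
    prev_recall = 0.0
    i = 0
    while i < len(ranked):
        # Consume every prediction that shares the current score.
        j = i
        while j < len(ranked) and ranked[j][0] == ranked[i][0]:
            true_pos += ranked[j][1]
            j += 1
        precision = true_pos / j        # j predictions are above this threshold
        recall = true_pos / total_pos
        ap += (recall - prev_recall) * precision
        prev_recall = recall
        i = j
    return ap


# Toy example: five images, three of them private.
toy_labels = [1, 0, 1, 1, 0]
toy_scores = [0.9, 0.8, 0.7, 0.4, 0.2]
toy_ap = average_precision(toy_labels, toy_scores)
print(round(toy_ap, 4))
```

A model that ranks every private image above every non-private one scores 1.0 under this definition, regardless of the absolute confidence values.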

Task 2: (Un)intentional Privacy Leak Recognition

Given an image and a question about it, the task is to predict whether the question asks about private visual information, in order to distinguish between intentional and unintentional privacy disclosures. The confidence score output by a prediction model is for the ‘qa_private’ label and should be in [0,1]. We evaluate with a Python implementation of average precision, which summarizes the precision-recall curve as a weighted mean of the precisions along it. The team that achieves the highest average precision on the test set wins this challenge.
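The distinction this task targets can be sketched from the two annotation flags. The mapping below is an interpretation, not something the dataset files state: it assumes that a private image whose question needs the private content (`qa_private` is 1) is an intentional disclosure, and that a private image whose question does not need it is an unintentional one. The function name and the sample records are hypothetical.

```python
def disclosure_type(record):
    """Label a record using its `private` and `qa_private` flags.

    Assumed interpretation: qa_private == 1 means the question asks about
    the private content, so the disclosure was intentional.
    """
    if not record.get("private"):
        return "no private content"
    if record.get("qa_private"):
        return "intentional"
    return "unintentional"


# Hypothetical records containing only the fields this sketch needs.
samples = [
    {"image": "a.jpg", "private": 1, "qa_private": 1},
    {"image": "b.jpg", "private": 1, "qa_private": 0},
    {"image": "c.jpg", "private": 0},
]
labels = [disclosure_type(s) for s in samples]

for sample, label in zip(samples, labels):
    print(sample["image"], label)
```

Under this reading, an assistive system could pass intentional disclosures through and obscure the private region before answering in the unintentional case.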

Code

The code for the annotation tool used to locate private information in images is at this link.

Publications

- VizWiz-Priv: A Dataset for Recognizing the Presence and Purpose of Private Visual Information in Images Taken by Blind People

Danna Gurari, Qing Li, Chi Lin, Yinan Zhao, Anhong Guo, Abigale J. Stangl, and Jeffrey P. Bigham. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

Contact Us

Please send questions about the code to Qing Li at liqing@ucla.edu.

Please send other questions, comments, or feedback to Danna Gurari at danna.gurari@colorado.edu.