Answer Visual Questions from People Who Are Blind

Overview

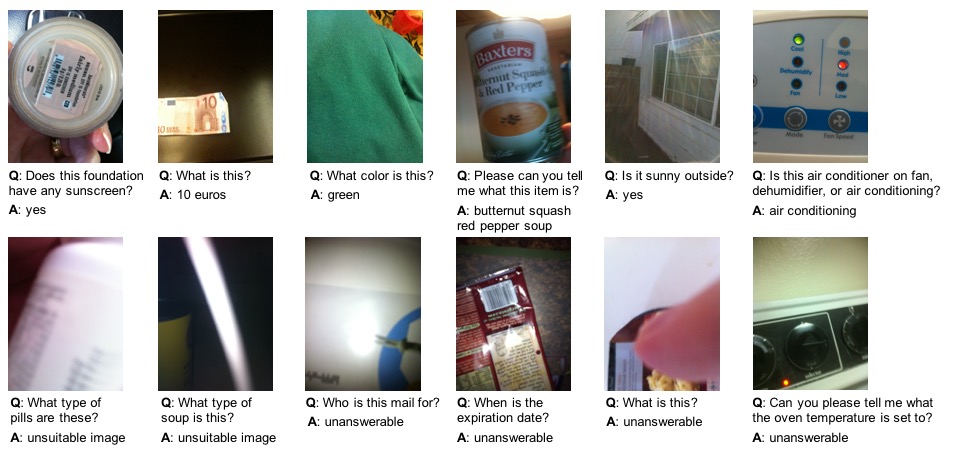

We propose an artificial intelligence challenge to design algorithms that answer visual questions asked by people who are blind. For this purpose, we introduce the visual question answering (VQA) dataset coming from this population, which we call VizWiz-VQA. It originates from a natural visual question answering setting where blind people each took an image and recorded a spoken question about it, together with 10 crowdsourced answers per visual question. Our proposed challenge addresses the following two tasks for this dataset: predict the answer to a visual question and (2) predict whether a visual question cannot be answered. Ultimately, we hope this work will educate more people about the technological needs of blind people while providing an exciting new opportunity for researchers to develop assistive technologies that eliminate accessibility barriers for blind people.

Dataset

Updated version as of January 10, 2023:

- Replaced “unsuitable” or “unsuitable image” in the answers to be “unanswerable”.

New, larger version as of January 1, 2020:

- 20,523 training image/question pairs

- 205,230 training answer/answer confidence pairs

- 4,319 validation image/question pairs

- 43,190 validation answer/answer confidence pairs

- 8,000 test image/question pairs

Deprecated version as of December 31, 2019:

- 20,000 training image/question pairs

- 200,000 training answer/answer confidence pairs

- 3,173 validation image/question pairs

- 31,730 validation answer/answer confidence pairs

- 8,000 test image/question pairs

New versus deprecated: the deprecated version has 12 digits for filenames (e.g., “VizWiz_val_000000028000.jpg”) while the new version has 8 digits for filenames (e.g., “VizWiz_val_00028000.jpg”) .

New dataset only: VizWiz_train_00023431.jpg — VizWiz_train_00023958.jpg are images which include private information that is obfuscated using the ImageNet mean. The questions do not ask about the obfuscated private information in these images.

Dataset files to download are as follows:

- Images: training, validation, and test sets

- Annotations and example code:

- Visual questions are split into three JSON files: train, validation, and test. Answers are publicly shared for the train and validation splits and hidden for the test split.

- APIs are provided to demonstrate how to parse the JSON files and evaluate methods against the ground truth.

- Details about each visual question are in the following format:

"answerable": 0,

"image": "VizWiz_val_00028000.jpg",

"question": "What is this?"

"answer_type": "unanswerable",

"answers": [

{"answer": "unanswerable", "answer_confidence": "yes"},

{"answer": "chair", "answer_confidence": "yes"},

{"answer": "unanswerable", "answer_confidence": "yes"},

{"answer": "unanswerable", "answer_confidence": "no"},

{"answer": "unanswerable", "answer_confidence": "yes"},

{"answer": "text", "answer_confidence": "maybe"},

{"answer": "unanswerable", "answer_confidence": "yes"},

{"answer": "bottle", "answer_confidence": "yes"},

{"answer": "unanswerable", "answer_confidence": "yes"},

{"answer": "unanswerable", "answer_confidence": "yes"}

]These files show two ways to assign answer-type: train.json, val.json. “answer_type” is the answer type for the most popular answer in the deprecated version and the most popular answer type for all answers’ answer types in the new version.

This work is licensed under a Creative Commons Attribution 4.0 International License.

Challenge

Our proposed challenge is designed around the VizWiz-VQA dataset and addresses the following two tasks:

Tasks

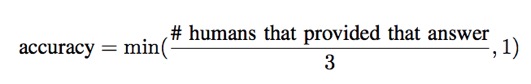

Task 1: Predict Answer to a Visual Question

Given an image and question about it, the task is to predict an accurate answer. Inspired by the VQA challenge, we use the following accuracy evaluation metric:

Following the VQA challenge, we average over all 10 choose 9 sets of human annotators. The team which achieves the maximum average accuracy for all test visual questions wins this challenge.

Task 2: Predict Answerability of a Visual Question

Given an image and question about it, the task is to predict if the visual question cannot be answered (with a confidence score in that prediction). The confidence score provided by a prediction model is for ‘answerable’ and should be in [0,1]. We use Python’s average precision evaluation metric which computes the weighted mean of precisions under a precision-recall curve. The team that achieves the largest average precision score for all test visual questions wins this challenge.

Submission Instructions

Evaluation Servers

Teams participating in the challenge must submit results for the full 2018 VizWiz-VQA test dataset (i.e., 8,000 visual questions) to our evaluation servers, which are hosted on EvalAI (challenges 2020, 2021, 2022, 2023, and 2024 are deprecated). As done for prior challenges (e.g., VQA, COCO), we created different partitions of the test dataset to support different evaluation purposes:

- Test-dev: this partition consists of 4,000 test visual questions and is available year-round. Each team can upload at most 10 submissions per day to receive evaluation results.

- Test-challenge: this partition is available for a limited duration before the Computer Vision and Pattern Recognition (CVPR) conference in June 2024 to support the challenge for the VQA task, and contains all 8,000 visual questions in the test dataset. Results on this partition will determine the challenge winners, which will be announced during the VizWiz Grand Challenge workshop hosted at CVPR. Each team can submit at most five results files over the length of the challenge and at most one result per day. The best scoring submitted result for each team will be selected as the team’s final entry for the competition.

- Test-standard: this partition is available to support algorithm evaluation year-round, and contains all 8,000 visual questions in the test dataset. Each team can submit at most five results files and at most one result per day. Each team can choose to share their results publicly or keep them private. When shared publicly, the top submission result will be published to the public leaderboard.

Uploading Submissions to Evaluation Servers

To submit results, each team will first need to create a single account on EvalAI. On the platform, then click on the “Submit” tab in EvalAI, select the submission phase (“test-dev”, “test-challenge”, or “test-standard”), select the results file (i.e., JSON file) to upload, fill in required metadata about the method, and then click “Submit”. The evaluation server may take several minutes to process the results. To have the submission results appear on the public leaderboard, when submitting to “test-standard”, check the box under “Show on Leaderboard”.

To view the status of a submission, navigate on the EvalAI platform to the “My Submissions” tab and choose the phase to which the results file was uploaded (i.e., “test-dev”, “test-challenge”, or “test-standard”). One of the following statuses should be shown: “Failed” or “Finished”. If the status is “Failed”, please check the “Stderr File” for the submission to troubleshoot. If the status is “Finished”, the evaluation successfully completed and the evaluation results can be downloaded. To do so, select “Result File” to retrieve the aggregated accuracy score for the submission phase used (i.e., “test-dev”, “test-challenge”, or “test-standard”).

The submission process is identical when submitting results to the “test-dev”, “test-challenge”, and “test-standard” evaluation servers. Therefore, we strongly recommend submitting your results first to “test-dev” to verify you understand the submission process.

Submission Results Formats

Use the following JSON formats to submit results for both challenge tasks:

Task 1: Predict Answer to a Visual Question

results = [result]

result = {

"image": string, # e.g., 'VizWiz_test_00020000.jpg'

"answer": string

}

Task 2: Predict Answerability of a Visual Question

results = [result]

result = {

"image": string, # e.g., 'VizWiz_test_00020000.jpg'

"answerable": float #confidence score, 1: answerable, 0: unanswerable

}

Leaderboards

New dataset (as of January 1, 2020): the Leaderboard pages for both tasks can be found here (Leaderboard 2020 can be found here).

Deprecated dataset (as of December 31, 2019): the Leaderboard pages for both tasks can be found here.

Rules

- Teams are allowed to use external data to train their algorithms. The only exception is teams are not allowed to use any annotations of the test dataset.

- Members of the same team are not allowed to create multiple accounts for a single project to submit more than the maximum number of submissions permitted per team on the test-challenge and test-standard datasets. The only exception is if the person is part of a team that is publishing more than one paper describing unrelated methods.

- If any unforeseen or unexpected event that cannot be reasonably anticipated or controlled affects the integrity of the Challenge, we reserve the right to cancel, change, or suspend the Challenge.

- If we have strong reason to believe that a team member cheats, we may disqualify your team, ban any team member from participating in any of our future Challenges, and notify your superiors (e.g., PhD advisor).

Code

The code for algorithm results reported in our CVPR 2018 publication is located here.

Publications

The new dataset is described in the following publications, while the deprecated version is based only on the first publication:

- VizWiz Grand Challenge: Answering Visual Questions from Blind People

Danna Gurari, Qing Li, Abigale J. Stangl, Anhong Guo, Chi Lin, Kristen Grauman, Jiebo Luo, and Jeffrey P. Bigham. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018. - VizWiz-Priv: A Dataset for Recognizing the Presence and Purpose of Private Visual Information in Images Taken by Blind People

Danna Gurari, Qing Li, Chi Lin, Yinan Zhao, Anhong Guo, Abigale J. Stangl, and Jeffrey P. Bigham. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

Contact Us

For questions about the VizWiz-VQA 2024 challenge that is part of the Visual Question Answering and Dialog workshop, please contact Everley Tseng at Yu-Yun.Tseng@colorado.edu or Chongyan Chen at chongyanchen_hci@utexas.edu.

For general questions, please review our FAQs page for answered questions and to post unanswered questions.

For questions about code, please send them to Qing Li at liqing@ucla.edu.

For other questions, comments, or feedback, please send them to Danna Gurari at danna.gurari@colorado.edu.