Identify the vision skills needed to answer visual questions

Overview

The task of answering questions about images has garnered attention both as a practical service for assisting populations with visual impairments and as a visual Turing test for the artificial intelligence community. Our first aim is to identify the common vision skills needed in both scenarios. To do so, we analyze the need for four vision skills (object recognition, text recognition, color recognition, and counting) on over 27,000 visual questions from two datasets representing the two scenarios. We next quantify the difficulty of these skills for both humans and computers on both datasets. Finally, we propose a novel task: predicting what vision skills are needed to answer a question about an image. Our results reveal (mis)matches between the aims of real users of such services and the focus of the AI community. We conclude with a discussion of future directions for addressing the visual question answering task.
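One way to read the proposed skill-prediction task is as multi-label classification: a single visual question may need several skills at once, so each of the four skills gets its own yes/no decision rather than one mutually exclusive choice. The sketch below assumes a hypothetical model (not shown) that outputs one raw score per skill; `predict_skills`, `sigmoid`, and the 0.5 threshold are illustrative choices, not the baseline models from the paper.

```python
import math

# The four vision skills analyzed in this work.
SKILLS = ["object recognition", "text recognition", "color recognition", "counting"]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function mapping a raw score to (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # safe for very negative x (underflows to 0.0)
    return e / (1.0 + e)


def predict_skills(logits: list[float], threshold: float = 0.5) -> list[str]:
    """Multi-label decoding: each skill is an independent yes/no decision.

    `logits` is a hypothetical per-skill score vector from some model, in the
    same order as SKILLS. The 0.5 threshold is an assumption, not a value
    taken from the paper.
    """
    if len(logits) != len(SKILLS):
        raise ValueError(f"expected {len(SKILLS)} scores, got {len(logits)}")
    return [skill for skill, z in zip(SKILLS, logits) if sigmoid(z) >= threshold]


# Made-up scores for one image-question pair (not real model output).
print(predict_skills([2.1, 1.3, -0.4, -3.0]))
```

Using independent sigmoids instead of a softmax over skills is what allows a question such as "how many red cans are on the shelf?" to be assigned both counting and color recognition.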

Dataset

The VizWiz-VQA-Skills dataset annotates:

- 14,259 VizWiz and 3,230 MS-COCO training images

- 2,248 VizWiz and 513 MS-COCO validation images

- 5,719 VizWiz and 1,291 MS-COCO test images

with the number of votes, out of five crowd workers, indicating the presence of each vision skill.
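As a minimal sketch of working with these annotations, the snippet below tallies the split sizes listed above (22,226 VizWiz plus 5,034 MS-COCO images, 27,260 in total, consistent with the "over 27,000" figure in the overview) and turns a per-skill vote count into a binary label. The helper `vote_to_label`, its majority threshold of three votes, and the example vote row are hypothetical; the CSV column names are not reproduced here, so mapping file columns to skills is left as an assumption.

```python
# Image counts per split as listed above: (VizWiz, MS-COCO).
SPLITS = {
    "train": (14_259, 3_230),
    "val": (2_248, 513),
    "test": (5_719, 1_291),
}


def vote_to_label(votes: int, min_votes: int = 3) -> int:
    """Binarize a crowd vote count (0 to 5 workers).

    min_votes=3 (a simple majority of five) is an assumed threshold,
    not one stated in this README.
    """
    if not 0 <= votes <= 5:
        raise ValueError(f"vote count must be in [0, 5], got {votes}")
    return int(votes >= min_votes)


total_vizwiz = sum(v for v, _ in SPLITS.values())
total_coco = sum(c for _, c in SPLITS.values())
print(total_vizwiz, total_coco, total_vizwiz + total_coco)

# A hypothetical vote row for one image-question pair (not real data).
votes = {"object recognition": 5, "text recognition": 2, "color recognition": 0, "counting": 3}
labels = {skill: vote_to_label(n) for skill, n in votes.items()}
print(labels)
```

Keeping the raw vote counts and choosing the threshold at load time makes it easy to compare stricter (e.g. unanimous) and looser label definitions on the same files.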

The download files are organized as follows:

- Images: please refer to the VizWiz and MS-COCO (2014 version) datasets

- Annotations (CSV files):

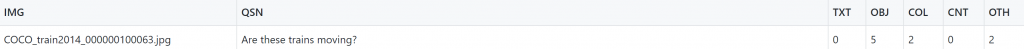

- Annotations for each image-question pair are in the following format:

Publications

The annotations are described in the following publication:

Vision Skills Needed to Answer Visual Questions

X. Zeng, Y. Wang, T. Chiu, N. Bhattacharya, and D. Gurari. Proceedings of the ACM on Human-Computer Interaction (PACM HCI), 2020.

Code

Code for the baseline models in the paper: link

Contact Us

Please send questions to Tai-Yin Chiu at chiu.taiyin@utexas.edu.