Locate the Foreground Object

Overview

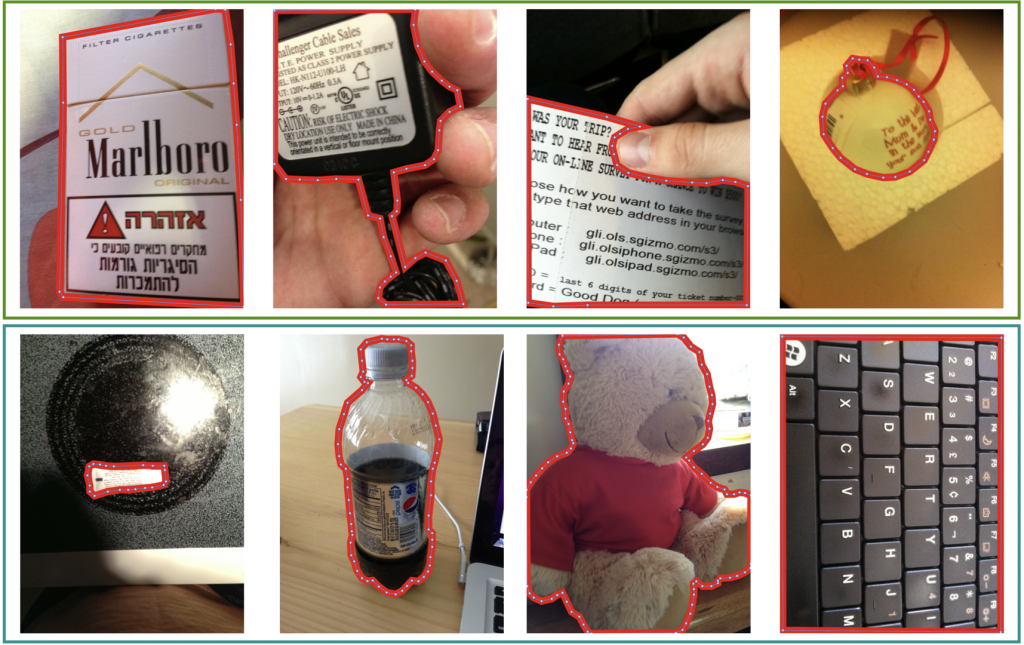

Salient object detection is the task of producing a binary mask for an image that deciphers which pixels belong to the foreground object versus the background. We introduce a new salient object detection dataset using images taken by visually impaired people seeking to better understand their surroundings, which we call VizWiz-SalientObject. Compared to seven existing datasets, VizWiz-SalientObject is the largest (i.e., 32,000 human-annotated images) and contains unique characteristics, including a higher prevalence of text in the salient objects (i.e., in 68% of images) and salient objects that occupy a larger ratio of the images (i.e., on average, ~50% coverage).

Salient object detection applications could offer several benefits to this community. For example, it could contribute to privacy preservation for photographers who rely on visual assistance technologies to learn about objects in their daily lives, using mobile phone applications such as Microsoft’s Seeing AI, Google Lookout, and TapTapSee. All content except the foreground content of interest could be obfuscated, which is important since private information is often inadvertently captured in the background of images taken by these photographers. Additionally, localization of the foreground object would empower low-vision users to rapidly magnify content of interest and enable quick inspection of smaller details.

VizWiz-SalientObject Dataset

- Images

- Annotations: [JSON files]

- 19,116 Training Examples

- 6,105 Validation Examples

- 6,779 Test Examples

- Additional metadata for all annotation responses. [Metadata]

- Each JSON annotation example uses the following format:

{

"VizWiz_train_00000004.jpg": {

"Full Screen": false,

"Total Polygons": 1,

"Ground Truth Dimensions": [500, 375],

"Salient Object": [

[

[11, 94],

[216, 102],

[208, 407],

[3, 406],

[1, 92]

]

]

}

}- This example [code] demonstrates how to load, resize, generate, and save binary masks for all images in the training dataset.

This work is licensed under a Creative Commons Attribution 4.0 International License.

Challenge

Our proposed challenge is designed around the aforementioned VizWiz-SalientObject dataset.

Task

Given an image featuring a single unambiguous foreground object (salient object), the task is to demarcate the salient object. The submissions will be evaluated based on the mean Intersection over Union (IoU) score across all test images. The team which achieves the maximum IoU score wins this challenge.

Submission Instructions

Evaluation Servers

Teams participating in the challenge must submit results for the test portion of the dataset to our evaluation servers, which are hosted on EvalAI. We created different partitions of the test dataset to support different evaluation purposes:

- Test-dev: this partition consists of 25% (1,695 examples) from the test dataset and is available year-round. Each team can upload at most ten submissions per day to receive evaluation results.

- Test-challenge: this partition is available for a limited duration before the Computer Vision and Pattern Recognition (CVPR) conference in June 2023 to support the challenge for the VizWiz-SalientObject task and contains all 6,779 examples in the test dataset. Each team can submit at most five results files over the length of the challenge and at most one result per day. The best scoring submitted result for each team will be selected as the team’s final entry for the competition.

- Test-standard: this partition is available to support algorithm evaluation year-round, and contains all 6,779 examples in the test dataset. Each team can submit at most five results files and at most one result per day. Each team can choose to share their results publicly or keep them private. When shared publicly, the best scoring submitted result will be published on the public leaderboard and will be selected as the team’s final entry for the competition.

Uploading Submissions to Evaluation Servers

To submit results, each team must create a single account on EvalAI. On the platform, then click on the “Submit” tab in EvalAI, select the submission phase (“test”), select the results file (i.e., zip file) to upload, fill in the required metadata about the method, and then click “Submit.” The evaluation server may take several minutes to process the results. To have the submission results appear on the public leaderboard, check the box under “Show on Leaderboard.”

To view the status of a submission, navigate on the EvalAI platform to the “My Submissions” tab and choose the phase to which the results file was uploaded (i.e., “test”). One of the following statuses should be shown: “Failed” or “Finished”. If the status is “Failed”, please check the “Stderr File” for the submission to troubleshoot. If the status is “Finished”, the evaluation successfully completed and the evaluation results can be downloaded. To do so, select “Result File” to retrieve the aggregated score for the submission phase used (i.e., “test”).

Submission Results Formats

Please submit a ZIP file containing all test results. Each result must be a binary mask in a PNG file format. Binary masks must be 720px by 720px to match the ground truth masks for proper training and evaluation.

Mask images are named using the scheme VizWiz_test_<image_id>.png, where <image_id> matches the corresponding VizWiz image for the mask.

Mask images are encoded as single-channel (grayscale) 8-bit PNGs (to provide lossless compression), where each pixel is either:

0: representing areas that are not the salient object.255: representing areas that are salient object

The foreground region in a mask image may be any size (including the entire image).

Leaderboards

The Leaderboard page for the challenge can be found here.

Rules

- Teams are allowed to use external data to train their algorithms. The only exception is that teams are not allowed to use any annotations of the test dataset.

- Members of the same team cannot create multiple accounts for a single project to submit more than the maximum number of submissions permitted per team on the test-challenge and test-standard datasets. The only exception is if the person is part of a team publishing more than one paper describing unrelated methods.

Publication

- Salient Object Detection for Images Taken by People With Vision Impairments

Jarek Reynolds, Chandra Kanth Nagesh, and Danna Gurari. IEEE Winter Conference on Applications of Computer Vision (WACV), 2024.

Contact Us

For any questions, comments, or feedback, please contact:

Chandra Kanth Nagesh at Chandra.Nagesh@colorado.edu

Jarek Reynolds at jare1686@colorado.edu.