Overview

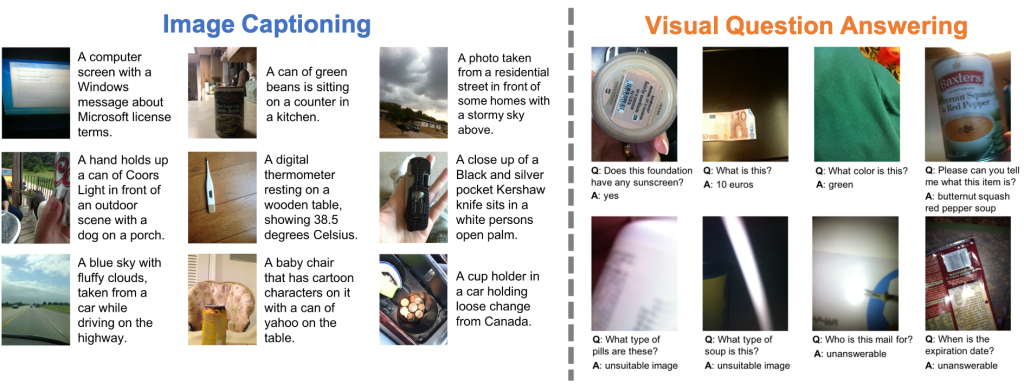

Our goal for this workshop is to educate researchers about the technological needs of people with vision impairments while empowering researchers to improve algorithms to meet these needs. A key component of this event will be to track progress on two dataset challenges, where the tasks are to answer visual questions and caption images taken by people who are blind. Winners of these challenges will receive awards sponsored by Microsoft. The second key component of this event will be a discussion about current research and application issues, including by invited speakers from both academia and industry who will share about their experiences in building today’s state-of-the-art assistive technologies as well as designing next-generation tools.

Important Dates

- Monday, February 1: challenge submissions announced

- Friday, May 21 [5:59pm Central Standard Time]: challenge submissions due

- Friday, May 21 [5:59pm Central Standard Time]: extended abstracts due

- Friday, May 28 [5:59pm Central Standard Time]: notification to authors about decisions for extended abstracts

- Saturday, June 19: all-day workshop

Submissions

We invite two types of submissions:

Challenge Submissions

We invite submissions of results from algorithms for both the image captioning challenge task and the visual question answering challenge task. We accept submissions for algorithms that are not published, currently under review, and already published. The teams with the top-performing submissions will be invited to give short talks during the workshop. The top two teams for each challenge will receive financial awards sponsored by Microsoft:

-

-

- 1rst place: $10,000 Microsoft Azure credit

- 2nd place: $5,000 Microsoft Azure credit

-

Extended Abstracts

We invite submissions of extended abstracts on topics related to image captioning, visual question answering, and assistive technologies for people with visual impairments. Papers must be at most two pages (with references) and follow the CVPR formatting guidelines using the provided author kit. Reviewing will be single-blind and accepted papers will be presented as posters. We will accept submissions on work that is not published, currently under review, and already published. There will be no proceedings. Please send your extended abstracts to workshop@vizwiz.org.

Please note that we will require all camera-ready content to be accessible via a screen reader. Given that making accessible PDFs and presentations may be a new process for some authors, we will host training sessions beforehand to both educate and assist all authors to succeed in making their content accessible. More details to come soon.

Program

Location:

Event is being held virtually.

Schedule:

All the time below are in Central Time (CT)

- 9:00-9:10am: Opening remarks

- 9:10-9:30am: Invited talk by Dhruv Batra

- 9:30-9:50am: Invited talk by Anna Rohrbach

- 9:50-10:10am: Invited talk by Cole Gleason

- 10:10-10:30am: Break

- 10:30-11:30am: Panel with blind technology advocates

- 11:30am-12:30pm: Lunch break

- 12:30-12:40pm: Overview of challenge, winner announcements, and analysis of results

- 12:40-12:50pm: Talks by top-2 teams for the VizWiz-Captions Challenge 2021

- 1st place: runner (Alibaba Group, Beihang University)

- 2nd place: Sparta117(SRC-B) (Samsung)

- 12:50-1:00pm: Talks by top-2 teams for the VizWiz-VQA Challenge 2021

- 1st place: DA_Team (Alibaba Group)

- 2nd place: HSSLAB_INSPUR (Inspur)

- 1:00-1:15pm: Poster spotlights

- 1:15-2:00pm: Poster session (Q&A in CVPR virtual platform, registration required)

- 2:00-2:30pm: Break

- 2:30-2:50pm: Invited talk by Yue-Ting Siu

- 2:50-3:10pm: Invited talk by Daniela Massiceti

- 3:10-3:30pm: Invited talk by Joshua Miele

- 3:30-3:45pm: Break

- 3:45-4:45pm: Panel with invited speakers

- 4:45-4:55pm: Open discussion

- 4:55-5:00pm: Closing remarks

Invited Speakers and Panelists:

Poster List

- An Improved Feature Extraction Approach to Image Captioning for Visually Impaired People

Dong Wook Kim, Joon gwon Hwang, Sang Hyeok Lim, Sang Hun Lee

- Cross-Attention with Self-Attention for VizWiz VQA

Rachana Jayaram, Shreya Maheshwari, Hemanth C, Sathvik N Jois, Dr. Mamatha H.R.

- Data augmentation to improve robustness of image captioning solutions

Shashank Bujimalla, Mahesh Subedar, Omesh Tickoo

- Dealing with Missing Modalities in the Visual Question Answer-Difference Prediction Task through Knowledge Distillation

Jae Won Cho, Dong-Jin Kim, Jinsoo Choi, Yunjae Jung, In So Kweon

- Enhancing Textual Cues in Multi-modal Transformers for VQA

Yu Liu, Lianghua Huang, Liuyihang Song, Bin Wang, Yingya Zhang, Pan Pan

- Live Photos: Mitigating the Impacts of Low-Quality Images in VQA

Lauren Olson, Chandra Kambhamettu, Kathleen McCoy

- Multiple Transformer Mining for VizWiz Image Caption

Xuchao Gong, Hongji Zhu, Yongliang Wang, Biaolong Chen, Aixi Zhang, Fangxun Shu, Si Liu

- Deep Co-Attention Model for Challenging Visual Question Answering on VizWiz

Wentao Mo, Yang Liu

- Two-stage Refinements for Vizwiz-VQA

Runze Zhang, Xiaochuan Li, Baoyu Fan, Zhenhua Guo, Yaqian Zhao, Rengang Li

Organizers

Danna Gurari

University of Texas at Austin

Jeffrey Bigham

Carnegie Mellon University, Apple

Meredith Morris

Google

Ed Cutrell

Microsoft

Abigale Stangl

University of Washington

Yinan Zhao

University of Texas at Austin

Samreen Anjum

University of Texas at Austin

Contact Us

For questions, comments, or feedback, please send them to Danna Gurari at danna.gurari@colorado.edu.