Overview

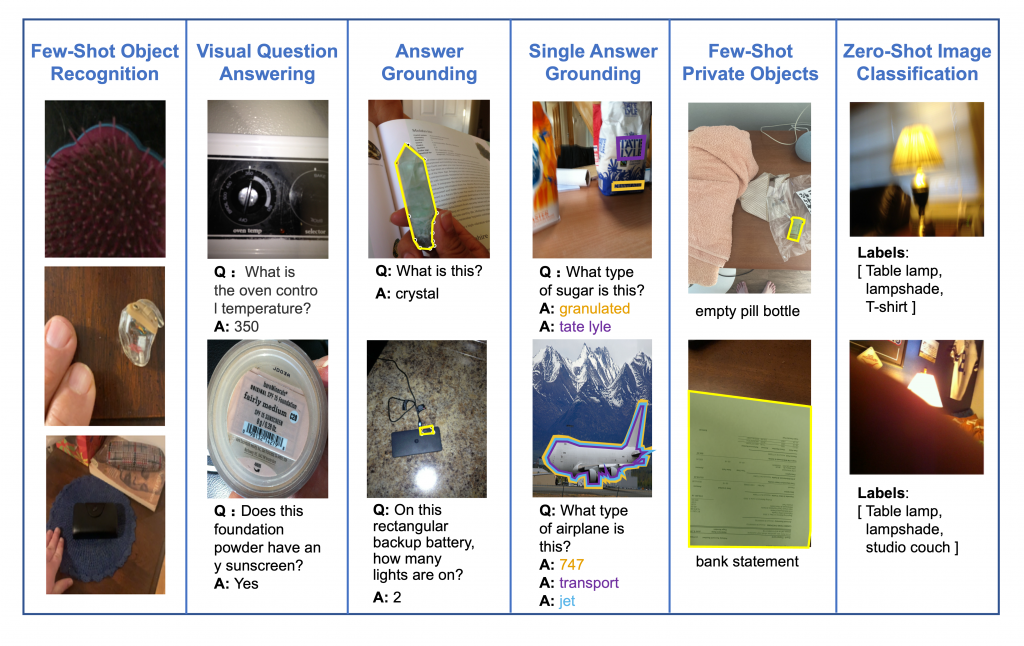

Our goal for this workshop is to educate researchers about the technological needs of people with vision impairments while empowering researchers to improve algorithms to meet these needs. A key component of this event will be to track progress on six dataset challenges, where the tasks are to answer visual questions, ground answers, recognize visual questions with multiple answer groundings, recognize objects in few-shot learning scenarios, locate objects in few-shot learning scenarios, and classify images in a zero-shot setting. The second key component of this event will be a discussion about current research and application issues, including invited speakers from both academia and industry who will share their experiences in building today’s state-of-the-art assistive technologies as well as designing next-generation tools.

Important Dates

- Thursday, January 11: challenge submissions announced

- Friday, January 12 [9:00 AM Central Standard Time]: challenges go live

- Friday, May 3 [9:00 AM Central Standard Time]: challenge submissions due

- Friday, May 10 [9:00 AM Central Standard Time]: extended abstracts due

- Friday, May 17 [5:59 PM Central Standard Time]: notification to authors about decisions for extended abstracts

- Tuesday, June 18: Half-day workshop

Submissions

We invite two types of submissions:

Challenge Submissions

We invite submissions about algorithms for the following six challenge tasks: visual question answering, answer grounding, single answer grounding recognition, few-shot video object recognition, few-shot private object localization, and zero-shot image classification. We accept submissions for algorithms that are not published, currently under review, and already published.

The teams with the top-performing submissions will be invited to give short talks during the workshop.

Extended Abstracts

We invite submissions of extended abstracts on topics related to all challenge tasks as well as assistive technologies for people with visual impairments. Papers must be at most two pages (with references) and follow the CVPR formatting guidelines using the provided author kit. Reviewing will be single-blind and accepted papers will be presented as posters. We will accept submissions on work that is not published, currently under review, and already published. There will be no proceedings. Please send your extended abstracts to workshop@vizwiz.org.

Please note that we will require all camera-ready content to be accessible via a screen reader. Given that making accessible PDFs and presentations may be a new process for some authors, we will host training sessions beforehand to both educate and assist all authors to succeed in making their content accessible.

Challenge Results

- Visual Question Answering

1st place: SLCV from Shopline AI Research (Baojun Li, Jiamian Huang, and Tao Liu)

2nd place: v1olet

3rd place: KNU-HomerunBall - Visual Question Answering Grounding

1st place: MGTV from Mango TV (Kang Zhang, Yi Yu, Shien Song, Haibo Lu, Jie Yang, Yangke Huang and Hongrui Jin)

2nd place: ByteDo

3rd place: UD VIMS Lab - VQA-AnswerTherapy

1st place: MGTV from Mango TV (Yi Yu, Kang Zhang, Shien Song, Haibo Lu, Jie Yang, Yangke Huang, Hongrui Jin)

2nd place: SLCV

3rd place: SIAI - ORBIT

1st place: ECO AI from SK ecoplant, South Korea (Hyunhak Shin, Yongkeun Yun, Dohyung Kim, Jihoon Seo, and Kyusam Oh) - BIV-Priv

1st place: tele-ai-a from China Telecom Artificial Intelligence Technology (Beijing) Co. and Xi’an Jiaotong University (Rongbao Han, Zihao Guo, Jin Wang, Tianyuan Song, Hao Yang, Jinglin Yang, and Hao Sun)

2nd place: ByteDo - Zero-Shot VizWiz-Classification

1st place: HBNUE from Hanbat National University (Huiwon Gwon, Sunhee Jo, Hyejeong Jo, and Chanho Jung)

2nd place: DoubleZW

3rd place: TeleCVG

Program

Location:

Summit 435, Seattle Convention Center [map]

Address: 900 Pine Street Seattle, WA 98101-2310

Schedule:

- 8:00-8:15 am: Opening remarks

- 8:15-8:30 am: Overview of three challenges related to VQA (VQA, Answer Grounding, Single Answer Grounding Recognition), winner announcements, and talks by challenge winners

- 8:30-9:00 am: Invited talk and Q&A with computer vision researcher (Soravit Beer Changpinyo)

- Talk title: “Toward Vision and Richer Language(s)”

- 9:00-9:30 am: Invited talk and Q&A with OpenAI representatives (Raul Puri and Rowan Zellers)

- Talk title: “Challenges in Deploying Omnimodels and Assistive Technology: Where we are and what’s next?”

- 9:30-9:45 am: Poster spotlight talks

- 9:45-10:30 am: Poster session (at Arch Building Exhibit Hall) and break

- 10:30-10:45 am: Overview of three zero-shot and few-shot learning challenges (few-shot video object recognition, few-shot private object localization, zero-shot classification), winner announcements, and talk by challenge winner

- 10:45-11:15 am: Invited talk and Q&A with blind comedian and writer (Brian Fischler)

- Talk title: “Will Computer Vision and A.I. Revolutionize the Web for People Who Are Blind?”

- 11:15-11:45 am: Invited talk and Q&A with linguistics expert, Elisa Kreiss

- Talk title: “How Communicative Principles (Should) Shape Human-Centered AI for Nonvisual Accessibility”

- 11:45-12:15 pm: Open Q&A panel with five invited speakers

- 12:15-12:20 pm: Closing remarks

Invited Speakers and Panelists:

Raul Puri

Deep Learning Researcher

OpenAI

Poster List

- Integrating Query-aware Segmentation and Cross-Attention for Robust VQA

Wonjun Choi, Sangbeom Lee, Seungyeon Lee, Heechul Jung, and Dong-Gyu Lee

Paper - Leveraging Large Vision-Language Models for Visual Question Answering in VizWiz Grand Challenge

Bao-Hiep Le , Trong-Hieu Nguyen-Mau, Dang-Khoa Nguyen-Vu , Vinh-Phat Ho-Ngoc , Hai-Dang Nguyen , and Minh-Triet Tran

Paper - Visual Question Answering with Multimodal Learning for VizWiz-VQA

Heegwang Kim, Chanyeong Park, Junbo Jang, Jiyoon Lee, Jaehong Yoon, and Joonki Paik

Paper - Shifted Reality: Navigating Altered Visual Inputs with Multimodal LLMs

Yuvanshu Agarwal and Peya Mowar

Paper - The Manga Whisperer: Making Comics Accessible to Everyone

Ragav Sachdeva and Andrew Zisserman

Paper - Vision-Language Model-based PolyFormer for Recognizing Visual Questions with Multiple Answer Groundings

Dai Quoc Tran, Armstrong Aboaj, Yuntae Jeon, Minsoo Park, and Seunghee Park

Paper - Technical Report for CVPR 2024 VizWiz Challenge Track 1-Predict Answer to a Visual Question

Jinming Chai, Qin Ma, Kexin Zhang, Zhongjian Huang, Licheng Jiao, and Xu Liu

Paper - Refining Pseudo Labels for Robust Test Time Adaptation

Huiwon Gwon, Sunhee Jo, Heajeong Jo, and Chanho Jung

Paper - A Zero-Shot Classification Method Based on Image Enhancement and Multimodal Model Fusion

Jiamin Cao, Lingqi Wang, Yujie Shang, Lingling Li, Fang Liu, and Wenping Ma

Paper - Few-Shot Private Object Localization via Support Token Matching

Junwen Pan, Dawei Lu, Xin Wan, Rui Zhang, Kai Huang, and Qi She

Paper - Distilled Mobile ViT for VizWiz Few-Shot Challenge 2024

Hyunhak Shin, Yongkeun Yun, Dohyung Kim, Jihoon Seo, and Kyusam Oh

Paper - Propose, Match, then Vote: Enhancing Robustness for Zero-shot Image Classification via Cross-modal Understanding

Jialong Zuo, Hanyu Zhou, Dongyue Wu, Wenxiao Wu, Changxin Gao, and Nong Sang

Paper

Organizers

Danna Gurari

University of Colorado Boulder

Jeffrey Bigham

Carnegie Mellon University, Apple

Ed Cutrell

Microsoft

Chongyan Chen

University of Texas at Austin

Contact Us

For questions, comments, or feedback, please send them to Danna Gurari at danna.gurari@colorado.edu.

Sponsor