Overview

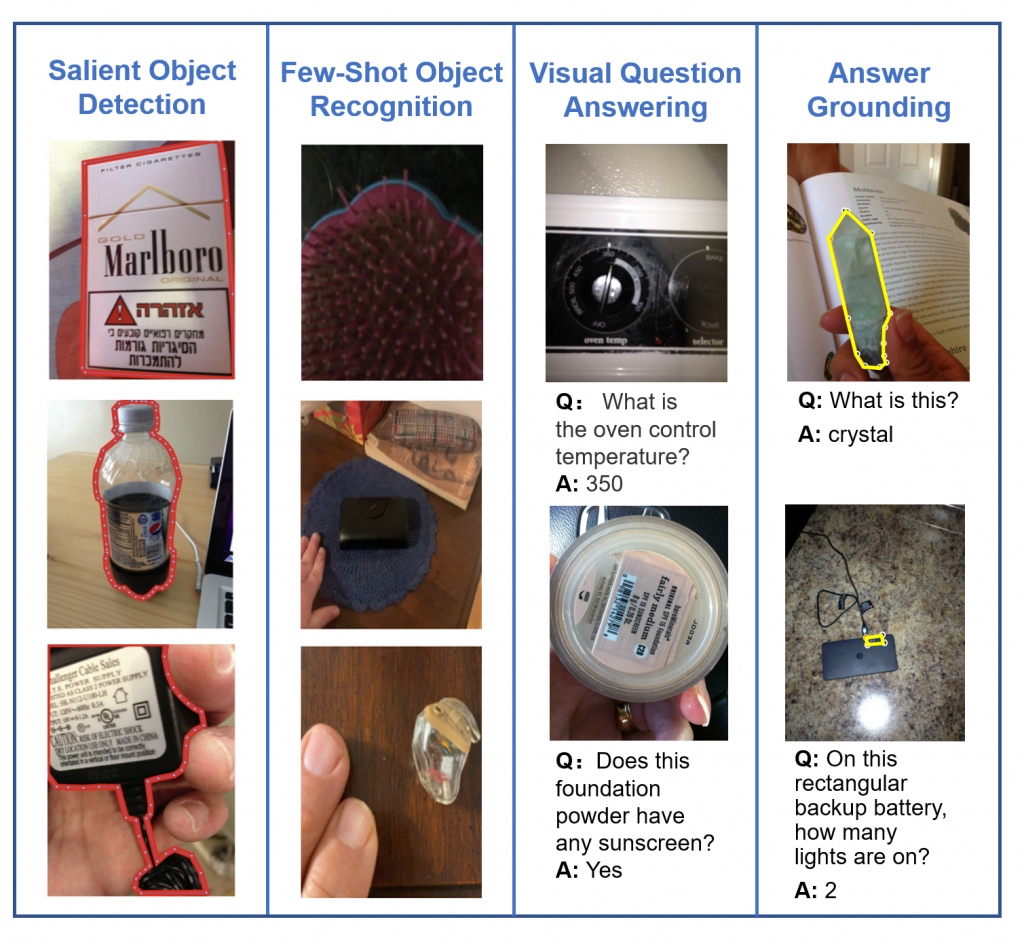

Our goal for this workshop is to educate researchers about the technological needs of people with vision impairments while empowering researchers to improve algorithms to meet these needs. A key component of this event will be to track progress on four dataset challenges, where the tasks are to answer visual questions, ground answers, detect salient objects, and recognize objects in few-shot learning scenarios. The second key component of this event will be a discussion about current research and application issues, including invited speakers from both academia and industry who will share their experiences in building today’s state-of-the-art assistive technologies as well as designing next-generation tools.

Important Dates

- Thursday, January 12: challenge submissions announced

- Friday, January 13 [9:00am Central Standard Time]: challenges go live

- Friday, May 5 [9:00am Central Standard Time]: challenge submissions due

- Friday, May 12 [9:00am Central Standard Time]: extended abstracts due

- Wednesday, May 17 [5:59pm Central Standard Time]: notification to authors about decisions for extended abstracts

- Monday, June 19: half-day workshop.

This year, June 19 marks Juneteenth, a US holiday commemorating the end of slavery in the US. We encourage attendees to learn more about Juneteenth and its historical context, and to join in celebrating the Juneteenth holiday. You can find out more information about Juneteenth here: https://www.nytimes.com/article/juneteenth-day-celebration.html

Submissions

We invite two types of submissions:

Challenge Submissions

We invite submissions about algorithms for the four challenge tasks: the visual question answering challenge task, the answer grounding challenge, the salient object detection challenge, and the few-shot object recognition challenge. We accept submissions for algorithms that are not published, currently under review, and already published.

The teams with the top-performing submissions will be invited to give short talks during the workshop. Microsoft Research will host the winning teams across all 4 challenges at a virtual event in July 2023. Teams will be invited to present their approaches to a wide audience of internal research and product teams working in machine learning and computer vision, a great opportunity to gain industry visibility for future job applicants.

Extended Abstracts

We invite submissions of extended abstracts on topics related to image captioning, visual question answering, visual grounding, salient object detection, few shot learning, and assistive technologies for people with visual impairments. Papers must be at most two pages (with references) and follow the CVPR formatting guidelines using the provided author kit. Reviewing will be single-blind and accepted papers will be presented as posters. We will accept submissions on work that is not published, currently under review, and already published. There will be no proceedings. Please send your extended abstracts to workshop@vizwiz.org.

Please note that we will require all camera-ready content to be accessible via a screen reader. Given that making accessible PDFs and presentations may be a new process for some authors, we will host training sessions beforehand to both educate and assist all authors to succeed in making their content accessible.

Program

Location:

Event is being held in a hybrid manner. For in-person attendance, the location is at the Vancouver Convention Center in Room: West 210. For virtual attendance, a Zoom link can be found on the virtual CVPR platform.

Schedule:

- 8:15-8:20: Opening remarks (video)

- 8:20-8:50: Invited talk and QA with computer vision researcher (Xin (Eric) Wang). (video)

- Talk title: “Building Generalizable, Scalable, and Trustworthy Multimodal Embodied Agents”

- 8:50-9:00: Overview of VQA challenges, winner announcements, analysis of results, and talks by challenge winners (video)

- 9:00-9:15: Overview of VQA grounding challenges, winner announcements, analysis of results, and talks by challenge winners (video)

- 9:15-9:45: Invited talk and QA with Google Lookout representative (Haoran Qi). (video)

- Talk title: “Building an App for Blind and Low Vision — Challenges and Opportunities”

- 9:45-10:00: Overview of few-shot object recognition challenge, winner announcements, analysis of results, and talk by challenge winner (video)

- 10:00-10:15: Break

- 10:15-10:45: Invited talk and QA with blind technology advocate (Thomas Reid). (video)

- Talk title: “When AI is Access & Independence”

- 10:45-11:00: Overview of salient object detection challenge, winner announcements, analysis of results, and talk by challenge winner (video)

- 11:00-11:25: Open QA panel with three invited speakers (audio)

- 11:25-11:30: Open discussion and closing remarks (video)

- 11:30-12:00: Poster session

Invited Speakers and Panelists:

Xin (Eric) Wang

Assistant Professor

University of

California, Santa Cruz

Thomas Reid

Audio Producer

“Reid My Mind”

Haoran Qi

Software Engineer

Google Lookout

Poster List

- Embedding Attention Blocks for the VizWiz Answer Grounding Challenge

Seyedalireza Khoshsirat, Chandra Kambhamettu

Paper - Learning Saliency Map From Transformer and Depth

Chenmao Li, Wei Ming, Qiaozhong Huang, Jiamao Li, Dongchen Zhu, Lei Wang

Paper - Advancing Visual Understanding and Accessibility for All: Image Captioning for Low Vision

Nevasini Sasikumar and Krishna Sri Ipsit Mantri - AutoAD: Movie Description in Context

Tengda Han, Max Bain, Arsha Nagrani, Gul Varol, Weidi Xie, Andrew Zisserman

Organizers

Danna Gurari

University of Colorado Boulder

Jeffrey Bigham

Carnegie Mellon University, Apple

Ed Cutrell

Microsoft

Daniela Massiceti

Microsoft

Samreen Anjum

University of Colorado Boulder

Contact Us

For questions, comments, or feedback, please send them to Danna Gurari at danna.gurari@colorado.edu.

Sponsor