Overview

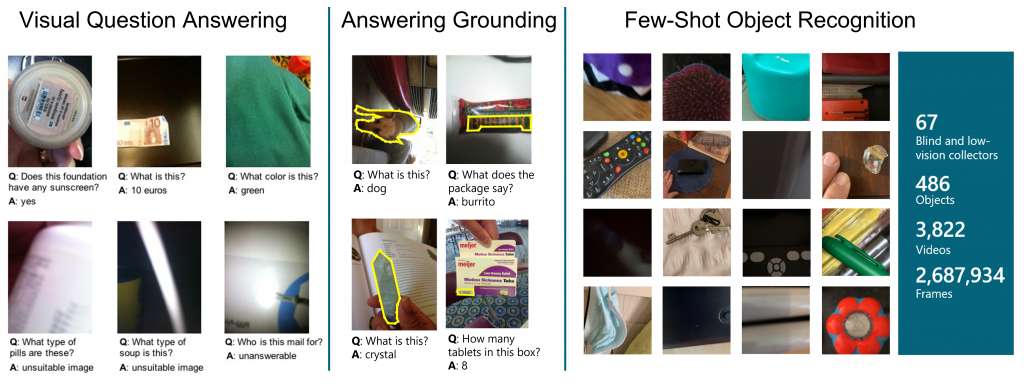

Our goal for this workshop is to educate researchers about the technological needs of people with vision impairments while empowering researchers to improve algorithms to meet these needs. A key component of this event will be to track progress on three dataset challenges, where the tasks are to answer visual questions and ground answers on images taken by people who are blind, and recognize objects in few-shot learning scenarios. Winners of these challenges will receive awards sponsored by Microsoft. The second key component of this event will be a discussion about current research and application issues, including by invited speakers from both academia and industry who will share about their experiences in building today’s state-of-the-art assistive technologies as well as designing next-generation tools.

Important Dates

- Monday, February 7: challenge submissions announced

- Friday, May 6 [9:00am Central Standard Time]: challenge submissions due

- Saturday, May 21 [5:59pm Central Standard Time]: extended abstracts due

- Friday, May 27 [5:59pm Central Standard Time]: notification to authors about decisions for extended abstracts

- Monday, June 20: all-day workshop

This year, June 19 and 20 marks Juneteenth, a US holiday commemorating the end of slavery in the US, and a holiday of special significance in the US South. We encourage attendees to learn more about Juneteenth and its historical context, and to join the city of New Orleans in celebrating the Juneteenth holiday. You can find out more information about Juneteenth here: https://cvpr2022.thecvf.com/recognizing-juneteenth

Submissions

We invite two types of submissions:

Challenge Submissions

We invite submissions of results from algorithms for three tasks: the visual question answering challenge task, the answer grounding challenge and the few-shot object recognition challenge. We accept submissions for algorithms that are not published, currently under review, and already published. The teams with the top-performing submissions will be invited to give short talks during the workshop. The top two teams for the visual question answering and answer grounding challenges will receive financial awards sponsored by Microsoft:

-

-

- 1rst place: $10,000 Microsoft Azure credit

- 2nd place: $5,000 Microsoft Azure credit

-

Extended Abstracts

We invite submissions of extended abstracts on topics related to image captioning, visual question answering, visual grounding and assistive technologies for people with visual impairments. Papers must be at most two pages (with references) and follow the CVPR formatting guidelines using the provided author kit. Reviewing will be single-blind and accepted papers will be presented as posters. We will accept submissions on work that is not published, currently under review, and already published. There will be no proceedings. Please send your extended abstracts to workshop@vizwiz.org.

Please note that we will require all camera-ready content to be accessible via a screen reader. Given that making accessible PDFs and presentations may be a new process for some authors, we will host training sessions beforehand to both educate and assist all authors to succeed in making their content accessible.

Program

Location:

Event is being held in a hybrid manner. The portion before lunch will be held in-person (New Orleans Ernest N. Morial Convention Center; Room #236). The portion after lunch will be live-streamed at the following URL: https://vizwiz.org/video.

Schedule:

- 9:00-9:05: opening remarks (video)

- 9:05-9:20: announcement of challenge winners

- 9:20-9:35: VizWiz-VQA challenge winner talks

- 9:35-9:50: VizWiz-VQA-Grounding challenge winner talks

- 9:50-10:05: Few-shot object recognition challenge winner talks

- 10:05-10:15: poster spotlight talks

- 10:15-11:00: poster session

- 11:00-11:30: lunch break

- 11:30-12:25: panel of blind technology advocates (Stephanie Enyart, Robin Christopherson, and Daniel Kish) (video) (transcript)

- 12:30-1:25: panel of industry representatives (Saqib Shaikh, Will Butler, Karthik Kannan, and Anne Taylor) (video) (transcript)

- 1:30-2:25: panel of computer vision researchers (Marcus Rohrbach, Andrew Howard, and James Coughlan) (video) (transcript)

- 2:30-3:25: interdisciplinary panel (Stephanie Enyart, Karthik Kannan, and James Coughlan) (video) (transcript)

- 3:30-4:15: interdisciplinary panel (Will Butler, Andrew Howard, and Daniel Kish) (video) (transcript)

- 4:20-5:00: interdisciplinary panel (Saqib Shaikh, Robin Christopherson, and Marcus Rohrbach) (video) (transcript)

Podcast:

The panel discussions are also available as a podcast and can be accessed on Spotify at the following URL: https://open.spotify.com/show/7AsiLuLq1Ay7QMBOUJNHfu

Invited Speakers and Panelists:

Marcus Rohrbach

Meta AI

Stephanie Enyart

Chief Public Policy & Research Officer, American Foundation for the Blind

Andrew Howard

Google AI

Anne Taylor

Principal Program Manager, Microsoft

James Coughlan

Senior Scientist/ Coughlan Lab Director, Smith-Kettlewell

Robin Christopherson

Head of Digital Inclusion, AbilityNet

Daniel Kish

President, World Access for the Blind

Karthik Kannan

Founder and Chief Technology Officer, Envision

Poster List

- Answer-Me: Multi-Task Open-Vocabulary Learning for Visual Question Answering

AJ Piergiovanni, Wei Li, Weicheng Kuo, Mohammad Saffar, Fred Bertsch, and Anelia Angelova

paper | video - Anomaly Detection for Visually Impaired People Using A 360 Degree Wearable Camera

Dong-in Kim, and Jangwon Lee

paper | poster | video - Less Is More: Linear Layers on CLIP Features as Powerful VizWiz Model

Fabian Deuser, Konrad Habel, Philipp J. Rosch, and Norbert Oswald

paper | poster | video - Photometric Enhancements to Improve Recognizability of Image Content

Lauren Olson, Chandra Kambhamettu, and Kathleen McCoy

paper | poster | video - Improving Descriptive Deficiencies with a Random Selection Loop for 3D Dense Captioning based on Point Clouds

Shinko Hayashi, Zhiqiang Zhang, and Jinja Zhou

paper | video - Tell Me the Evidence? Dual Visual-Linguistic Interaction for Answer Grounding

Junwen Pan, Guanlin Chen, Yi Liu, Jiexiang Wang, Cheng Bian, Pengfei Zhu, Zhicheng Zhang

paper | video - An End-to-end Vision-language Pre-Trainer for VizWiz-VQA

Dongze Hao, Yonghua Pan, Fei Liu, Tongtian Yue, Xinxin Zhu, and Jing Liu

poster - Question-Aware Vision Transformer for VQA Grounding Segmentation

Zhenduo Zhang, Jingyu Liu, Sheng Chen

poster - Answer Anchors for VizWiz Answer Grounding

Rengang, Yaqian, Hongwei, Zhenhua, Baoyu, Runze, Xiaochuan

poster

Organizers

Danna Gurari

University of Colorado Boulder

Jeffrey Bigham

Carnegie Mellon University, Apple

Ed Cutrell

Microsoft

Daniela Massiceti

Microsoft

Abigale Stangl

University of Washington

Samreen Anjum

University of Colorado Boulder

Contact Us

For questions, comments, or feedback, please send them to Danna Gurari at danna.gurari@colorado.edu.

Sponsors